The intellectual background of the research project can be traced back to David Brady's graduate school experience at the California Institute of Technology in the 1980s. Under the direction of Demitri Psaltis, Brady explored the use of volume holography to mimic the neural processes of the human brain, creating an optical perceptron, a form of artificial neural network. The photo at right shows an optical neural network from Brady's work at that time. The construction of multiple grating holograms enabled the implementation of large-scale linear transformations on distributed optical fields, an advance that broke through the historical barriers holding back the potential of optical networks.

Photonic Systems Group

In 1990, David Brady accepted a position with the Beckman Institute for Advanced Science and Technology of the University of Illinois at Urbana-Champaign. It was at UIUC that the immediate predecessor of DISP came into being, namely, the Photonic Systems Group. Over time, the new group represented a change of emphasis from Brady's interests at Caltech. Whereas his original research focused on the possibilities of optical computing, the new group turned its attention toward optical communication, studying ways to better collect, manage and traffic light-borne information. Over the course of the 1990s, the focus of the Photonic Systems Group developed further, shifting from optical means of storing information to photonic sensing and perception.

The group's initial work built on the importance of holography as a means of computer storage. In 1994, the group received a patent for its development of multilayered optical memory with means for recording and reading information. The group continued to work on optical storage, experimenting with different dyes and media for holography. The picture on the right is an example of a planar holographic interconnect that the group developed by the middle of the decade.

Holographic Pulse Shapers

The Photonic Systems Group continued to focus on reflection volume holograms, now using them to encode complex time-domain optical fields. In 1994, the group demonstrated that volume holography could be used to encode ultrafast three-dimensional pulsed images. The spatial and temporal degrees of freedom in these images will allow direct control of space-time pattern formation in quantum dynamical processes. For example, this brief video clip demonstrating experimental pulsed images consists of 128 frames reconstructed from repetitively sampled pulsed light scattered by a volume hologram recorded in lithium niobate. The movie spells the words "GO ILLINI". Each letter is separated from the previous one by 1 picosecond. The spatial scale of the letters is approximately 1 millimeter. The burst data rate of the image in this movie is approximately equivalent to one hundred billion parallel video channels.

In 1996, the group discovered a new approach to the fabrication of micropolarizers. These thin films contained arrays of polarizing elements that varied the polarization from pixel to pixel, a significant advance that aided the development of 3-D imaging, vision and display systems. This development also signified a new phase of cooperation between the university-based research group and outside companies, in this case the Equinox Corporation, which funded the project through a sponsored research agreement with the University of Illinois.

Superresolved Optical Microscopy

Superresolved optical microscopy using polychromatic interferometric imaging is a way to collect more information about an object than a classical imaging system can. As a feature of an object becomes small compared to the wavelength of the light used to view it, information about the feature no longer propagates with the field in the normal sense. In other words, the feature can no longer be seen through a microscope, no matter how strong the magnification. However, whether an object is viewed by eye or with a camera, only the intensity of the field is perceived. This leaves a vital part of the information, the phase of the optical field, untouched.

The Photonic Systems Group demonstrated that the extra information provided by such a system can be used to resolve subwavelength features of an object. One use for this added information comes about when considering form dispersion. Form dispersion is the ability of a subwavelength structure to encode information in the phase of an optical field. More specifically, the subwavelength structure imparts relative phase shifts among the different spectral components of the field. Conventionally, any information associated with feature sizes smaller than one half the wavelength of the probing light decays exponentially with distance from the scattering feature. Thus, after a short distance, no feature information exists in the magnitude of the field. However, if one has access to the phase of the spectral components making up the optical field, it is possible to gain some knowledge about the scattering structure even though conventionally this is not possible. We accomplished this goal by illuminating an object with polychromatic light and extracting the relative phase shifts between the different spectral components using an interferometer.

Scanning Tunneling Microscope

In 1996, the group received a grant from the National Science Foundation for a project to study "Spatial Parallelism and Ultra-Fast Dynamics in Tunneling Microscope-Surface and Adsorbate-Surface Interactions." Scanning Tunneling Microscopy (STM) is a scanning probe technique that allows the details of a surface to be determined at the distance scale of an individual atom. Unlike a regular microscope, which collects light from different points of a surface and uses the number of photons collected from each point to map surface features, the STM collects electrons from points on a surface and similarly uses the number of electrons collected to determine the shape of surface features. A conducting tip, with a single atom at the end, is scanned over the surface, recording the electric current at each point to reconstruct the locations of atoms and electrons on the surface. The high degree of localization provided by STM provides an opportunity to study the dynamics of individual atoms on surfaces. This project represented a significant step in the development of nanotechnology - the science of engineering and constructing devices consisting of individual atoms. For nanotechnology to become a reality, scientists needed to understand how to manipulate individual atoms on surfaces and how to control quantum mechanical systems with light.

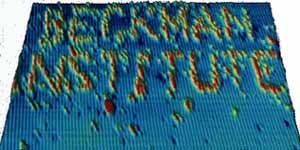

In an STM, a computer typically sends instructions to move the tip over the surface of the sample. By scanning the tip over the surface in rows and columns, like the scanning beam inside a television, the instrument builds a complete image of the surface by measuring the distance between the tip and the surface at which a certain number of electrons are collected. Because the movements are so fine, the instrument must be isolated from the vibrations produced by the environment. While these vibrations are tiny on a human scale, they are enormous on the atomic scale. In addition, the tip can move atoms around if the attractive force between the tip and surface is great enough. An atom may be extracted from the surface and stuck to the tip, moved by the tip, and redeposited by applying a repulsive force between the tip and the atom. In this way, atoms can be arranged in various patterns. On the right is an example of nanograffiti created using the Scanning Tunneling Microscope. The image depicts a scan of individual hydrogen atoms patterned onto a silicon [100] surface by an STM. These words are approximately ten million times smaller than the words "Beckman Institute" that you see in this sentence. Our interest is in investigating chemical processes by using the STM to image the surface, while employing light to encourage chemical reactions to occur.

Sampling Field Sensors

In the middle of the 1990s, the Photonic Systems Group began exploring issues of imaging, including novel sensors and data inversion algorithms for these sensors. In 1996, group members developed the Sampling Field Sensor (SFS) - an instrument that uses interference between fields drawn from different sample points to determine the phase and amplitude of an electromagnetic field. The measured field can be used to image objects and map their spatial distributions. These sensors represented a radical improvement on conventional imaging systems, which measured the intensity of a field rather than the field itself.

The Sampling Field Sensor works by applying the principles of digital sampling to optical fields. Sampling theory has been employed in engineering to create many of the modern miracles enabled by digital signal processing: high-fidelity digital music and video, wired and wireless communications, and medical imaging. These applications work because they can transform a difficult-to-handle analog signal into a digital form that can be manipulated in nearly arbitrary ways. Once an accurate digital representation is created, the data can be efficiently decoded. However, the field of optics has not benefited nearly as much from these advances because optical components are inflexible compared with electronic circuits. Bridging the gap between the optical and electronic representations of data would allow the best of both to be applied.

The SFS works by filtering out samples of the field and combining these samples in specific, highly controllable ways that yield the phase information in the field with a high degree of accuracy. Figure 1 shows a diagram of this principle. An optical field is incident on a "sampling stage" which selects representative portions, or samples, of the optical field to be measured. While selecting portions of the field excludes others, the samples can be processed more thoroughly by the succeeding stages. The samples are then combined using a "fan out" mechanism. The combinations of the samples produce the phase information that cannot otherwise be directly detected by the receiver plane. The fan out is designed so that even if the instrument is perturbed, the effect on the measurement is minimized. These combinations are detected by the receiver plane, where they are converted to digital electrical signals.
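
The idea that combining samples reveals phase which an intensity detector cannot see directly can be illustrated with standard four-step phase shifting. The Python sketch below is an assumption chosen for illustration, not necessarily the combination scheme used in the Sampling Field Sensor: two field samples `a` and `b` are interfered with four known phase shifts, and the four detected intensities give the complex product of `a` with the conjugate of `b`, whose argument is the relative phase. All function names and sample values are hypothetical.

```python
import cmath
import math


def detect_intensity(a, b, shift):
    """Intensity seen by a detector when sample b is phase-shifted and added to a."""
    return abs(a + b * cmath.exp(1j * shift)) ** 2


def mutual_term(a, b):
    """Recover a * conj(b) from four intensity-only measurements."""
    i0, i1, i2, i3 = (
        detect_intensity(a, b, k * math.pi / 2) for k in range(4)
    )
    # I(shift) = |a|^2 + |b|^2 + 2*(Re(c)*cos(shift) + Im(c)*sin(shift)), c = a*conj(b)
    return complex(i0 - i2, i1 - i3) / 4


# Two hypothetical field samples with different amplitudes and phases.
a = 0.8 * cmath.exp(1j * 0.3)
b = 1.2 * cmath.exp(1j * 1.1)

c = mutual_term(a, b)
relative_phase = cmath.phase(c)  # phase of a minus phase of b
print(relative_phase)
```

Each individual intensity reading hides the phase, but the differences between readings isolate the real and imaginary parts of the interference term.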

Interferometric Digital Imaging

A major breakthrough in the development of these computational sensors came with the development of a tomography-based algorithm that computes a three-dimensional model from images of an object taken from multiple viewpoints. This method utilized cone-beam algorithms like those already used in X-ray Computed Tomography, and showed much promise for providing reliable and robust three-dimensional imaging of complex objects.

In 1998, the Photonic Systems Group received a grant from the Army Research Office (ARO) to create the Illinois Interferometric Imaging Initiative (4Is) - a cross-disciplinary effort building interferometric imaging systems that yield novel images for applications in 3D imaging, target acquisition, adaptive optics, and wavefront capture. Digital imaging -- images produced from computational analysis, rather than direct capture of a physical field -- while then common in radar and medicine, was less utilized in the optical, infrared and microwave ranges. Interferometry -- the method for detecting phase in the high frequency range of the electromagnetic spectrum -- had generally utilized low spatial frequency devices comparing relatively few samples. Through this project the group developed highly parallel imaging interferometers that combine interferometric measurements with digital analysis to yield images which capture all the information in the field and which contain more useful object information than conventional systems.

One aspect of the program that received widespread attention was the lensless capture of three-dimensional objects. Science magazine in June 1999 featured the research with full color illustrations of the toy dinosaur reconstructed by the interferometry. Two brief movies display the possibilities of this technology. The first is a three-dimensional reconstruction of a dinosaur which is rotated to demonstrate all angles of the image capture. The second depicts a toy moon man being similarly rotated.

An additional breakthrough came with the invention of the Quantum Dot Spectrometer - an instrument that replicates the function of interferometric spectrometers in a point detector. This invention joined the spectroscopic sensitivity of optical absorption in quantum dots with electrical readout to produce spectrally sensitive detectors. In contrast to conventional electronic detectors, which produce signals proportional to the intensity of an optical signal, the invention produces signals proportional to the power spectral density of the signal.

Argus

This interest in three-dimensional imaging stimulated the development of the Argus project -- a parallel computer that can acquire real-time data from a large number of cameras situated at multiple viewpoints, and compute in parallel the three-dimensional reconstruction from these viewpoints using the developed cone-beam algorithms. Situated in a special room in which all of the cameras viewed a central subject, Argus demonstrated the feasibility of real-time three-dimensional reconstruction while optimizing the quality of the reconstructed data. The project made use of a Beowulf cluster, a set of Linux-based PCs connected by a high-speed network and operated as a parallel computer, providing parallel data acquisition that would be difficult with a conventional computing system.

The Argus system was designed to produce a telepresence that combines computer imaging and visualization technologies to produce highly interactive virtual environments. This technology allows remote users to interact and experience local events as if they were physically located in the environment through the use of a dense camera array, which is capable of capturing information from all angles around the imaging space. Each camera is attached to a node in a computational cluster, effectively producing an array of sensor-processors. The group developed two distinct approaches to achieve the goal of telepresence. In our model-based approach we used a tomographic algorithm to generate complete three-dimensional models of the physical environment. Remote users can experience the imaging space by viewing these models. In the view-based approach, the remote user interacts directly with the Argus imaging environment. Instead of generating views based on a representation of the space, we have developed algorithms that generate only the requested view for the user. An example of Argus' capabilities can be viewed in two short movies. The first depicts a dancer using the model-based approach. The second is a view-based representation of the photonics group.

Distant Focus

In 1997, the Photonic Systems Group received its first corporate expression with the founding of Distant Focus, a company specializing in optical systems, computer automation, specialty optical sensors and sensor networks, and distributed processing environments. In 2001, Distant Focus subcontracted with UIUC to complete the study "Tomographic Imaging on Distributed Unattended Ground Sensor Arrays", funded by the Defense Advanced Research Projects Agency (DARPA). This research was proposed and directed by Dr. David Brady and was initially carried out by the Photonic Systems Group at the Beckman Institute, whose core members later formed Distant Focus. The project was nicknamed MEDUSA, which stands for "Mobile Distributed Unattended Sensor Arrays". The goal of this program was to develop unattended sensors and sensor arrays that accurately and robustly track targets using 3-D tomographic techniques. An early example of this type of sensor, dubbed Morpheus, can be seen to the left. In principle, the more information -- acoustic, optical, magnetic and seismic -- the sensor array detects, the easier this task becomes.

Fitzpatrick Center

Distant Focus took over the MEDUSA program because the Photonic Systems Group was on the move. In December of 2000, a generous gift from Michael and Patty Fitzpatrick funded a new interdisciplinary approach to scientific inquiry at Duke University called the Fitzpatrick Center for Photonics and Communication Systems. David Brady accepted the invitation to become the director of this new center and moved to the Research Triangle in 2001. Many members of the Illinois research team came with him, and the Photonic Systems Group became DISP.

The change in physical location offered the opportunity to take old research interests in new directions. An original goal of the Argus project was to relay the three-dimensional data to the CAVE virtual reality environment at the National Center for Supercomputing Applications. This relay would allow a CAVE user to view a real-time three-dimensional reconstruction of a scene at a remote location, with the ability to view from all angles and positions. The new building constructed at Duke, called the Fitzpatrick Center for Interdisciplinary Engineering, Medicine and Applied Sciences (CIEMAS), contained its own CAVE environment to facilitate the interdisciplinary research.

Optopo/Centice

In 2004, group members David Brady, Mike Sullivan and Prasant Potuluri won the $50,000 top prize and a $5,000 People's Choice award in the Duke Startup Challenge for their new computational optical sensors company, dubbed Optopo. Now called Centice, this start-up represents a new means of marketing and commercializing the spectrometer and sensor discoveries originally developed by the DISP group. Centice has taken its place in the growing photonics industry alongside other prominent corporations in the Research Triangle of North Carolina.

Telescope Interferometry

DISP developed an array of rotational shear interferometers attached to telescopes for joint target triangulation and estimation from spatio-spectral tracking. Conventional telescopes have limited power to resolve small objects in orbit due to atmospheric aberrations. Systems with adaptive optics can correct for atmospheric aberrations, but are generally very expensive. We constructed an alternative system that uses coherence imaging to track and spectrally identify objects in orbit. Rather than imaging the structure of the object in orbit, our system measures the object's spectral signature while simultaneously providing location information for the object.

The archived Telescope Interferometry project page has more information.

Biometric Sensing

DISP constructed a distributed sensor detection system that used a combination of video cameras and infrared point detectors to detect the position and pose of a human subject. Video sensors consume a great deal of bandwidth, so they were used only to calibrate the infrared system. Using RST technology as well, we were able to determine human pose with only a hundred distributed IR sensors, rather than the millions of pixels used in conventional systems.

The DISP group used motion-sensing pyroelectric detectors along with Fresnel lens arrays, both of which are commonly found in home and office security systems. Each detector senses motion within its field of view by detecting changes in the heat distribution that occur when a person moves. The group developed custom sensor platforms having multiple sensing channels, each channel having a unique modulation code through the Fresnel lens arrays, as well as a wired/wireless communication system to stream out the sensor data. A network of these sensor platforms was deployed in the specially developed Sensor Studio in the CIEMAS building. The amount of data produced by a sensor platform was measured in kilobytes, a small fraction of the megabytes or gigabytes of data from video-based systems. Telepresence was demonstrated by tracking the motion of a person in the studio and relaying the coordinates to the DIVE, which displayed a mock-up of the sensor studio and the current location of the person being tracked. Another extension to this program was biometric identification, which relied on the unique gait (or heat signatures) of different individuals. By training the system to recognize certain features in the temporal signals from the sensors, our group demonstrated tracking and identification of people with very high accuracy.

The archived Biometric Sensing project page has more information.

Spectroscopy Project

In this project the DISP group developed diffuse source spectrometers for biological sensors for both the detection of dangerous chemical and biological substances and the non-invasive detection of chemical elements in the human bloodstream. Putting the methodology of Raman Spectroscopy into action, this work was a step towards developing a sensor to detect alcohol in the bloodstream without requiring sophisticated, but invasive, blood examinations or less reliable breathalyzer tests.

The archived Spectroscopy project page has more information.

COMP-I Initiative

COMP-I (Compressive Optical MONTAGE Photography Initiative) was a project funded by DARPA's MONTAGE program. The goal of COMP-I was to dramatically reduce the volume and form factor of visible and infrared imaging systems without loss of resolution. The COMP-I approach also yields substantial advantages in color and data management in imaging systems. A prototype camera from the COMP-I project is shown in the picture on the right.

The archived COMP-I project page has more information.

Multi-aperture Imaging Systems

Evolving from COMP-I (the Compressive Optical MONTAGE Photography Initiative), the DISP Multi-Aperture Imaging Project focused on new applications for these systems. Initially, thin imaging was the primary motivator, and arrays of short focal length lenses were used to miniaturize cameras. This work led to the exploration of multi-aperture imaging for extended depth of field and increased FOV (field of view). A shorter focal length lens will inherently have a greater depth of field. We also examined more advanced designs, such as combining lenses of differing focal lengths on a single array and orienting lenses to point in different directions to increase system FOV beyond the limited FOV of conventional cameras.

The archived Multi-aperture project page has more information.

Tissue Spectroscopy

This project involved using aperture-coded systems to improve the photon collection efficiency of conventional Raman spectroscopy systems. The systems developed for this project differ from conventional slit-based spectrometers by replacing the input slit with a mathematically well-defined code. Binary-valued codes, such as those based on Hadamard matrices, give higher throughput than conventional systems without sacrificing resolution. Because a computational sensor measures, at its focal plane, a convolution between the source and the input aperture, inversion becomes a computational problem. Inversion techniques have included iterative algorithms such as nonnegative least squares (NNLS) and expectation maximization, along with principal component analysis (PCA) and multiple linear regression (MLR) for concentration estimation of target Raman-active signals in tissue phantoms. The Hadamard-mask multiple-channel Raman spectrometer can be used as a portable, low-cost, and non-invasive solution for biomedical diagnostics.
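
The throughput argument can be made concrete with a toy multiplexing model in Python. This is a simplified sketch under stated assumptions, not the group's instrument model: the measurement is treated as a plain matrix product rather than a convolution, the mask is a 0/1 version of a Sylvester-type Hadamard matrix, noise is ignored, and inversion uses the closed-form Hadamard inverse instead of NNLS. The function names and the example spectrum are hypothetical.

```python
def sylvester_hadamard(order):
    """Build a +1/-1 Hadamard matrix; order must be a power of two."""
    if order < 1 or order & (order - 1):
        raise ValueError("order must be a power of two")
    h = [[1]]
    while len(h) < order:
        h = [row + row for row in h] + [row + [-v for v in row] for row in h]
    return h


def coded_measurements(spectrum):
    """Each measurement sums the channels passed by one 0/1 mask row."""
    h = sylvester_hadamard(len(spectrum))
    mask = [[(v + 1) // 2 for v in row] for row in h]  # +1 -> open, -1 -> closed
    return [sum(m * x for m, x in zip(row, spectrum)) for row in mask]


def decode(measurements):
    """Invert the 0/1 mask using the orthogonality of the Hadamard matrix."""
    n = len(measurements)
    h = sylvester_hadamard(n)
    total = measurements[0]  # the first mask row is fully open
    bipolar = [2 * y - total for y in measurements]  # equals H @ spectrum
    return [sum(h[i][j] * bipolar[i] for i in range(n)) / n for j in range(n)]


spectrum = [3, 0, 5, 1, 0, 2, 7, 4]  # hypothetical channel intensities
recovered = decode(coded_measurements(spectrum))
print(recovered)
```

Every mask row after the first opens half of the channels, whereas a single slit would pass only one channel per measurement, which is the source of the throughput gain in this simplified picture.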

The archived Tissue Spectroscopy page has more information.

Compressive Holography

The compressive holography project demonstrated that decompressive inference enables 3D tomography from a single 2D monochromatic digital hologram. In compressive sensing, signals that are sparse in some basis and sampled by multiplex encodings may be accurately inferred, with high probability, from many fewer measurements than Shannon's sampling theorem suggests. Holography has general advantages in compressive optical imaging since it is an interferometric modality in which both the amplitude and the phase of a field can be obtained. The complex-valued encodings may provide a more direct application of compressive sensing.
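
The compressive sensing claim can be illustrated in its simplest form: a signal with a single nonzero entry among 64 unknowns, recovered from 8 multiplexed measurements. The Python sketch below uses one step of greedy matching pursuit with a seeded random sensing matrix. It is an illustrative assumption, not the reconstruction method used in the compressive holography project; signals with more nonzero entries generally call for methods such as orthogonal matching pursuit or l1 minimization. All names and values are hypothetical.

```python
import math
import random


def unit_columns(n_rows, n_cols, seed=0):
    """Random Gaussian sensing matrix, stored as a list of unit-norm columns."""
    rng = random.Random(seed)
    cols = []
    for _ in range(n_cols):
        col = [rng.gauss(0.0, 1.0) for _ in range(n_rows)]
        norm = math.sqrt(sum(v * v for v in col))
        cols.append([v / norm for v in col])
    return cols


def measure(cols, signal):
    """Multiplexed measurements: each one mixes every entry of the signal."""
    n_rows = len(cols[0])
    return [
        sum(cols[j][i] * signal[j] for j in range(len(cols)))
        for i in range(n_rows)
    ]


def recover_one_sparse(cols, y):
    """Pick the column most correlated with y; its correlation is the amplitude."""
    corr = [sum(c * v for c, v in zip(col, y)) for col in cols]
    best = max(range(len(cols)), key=lambda j: abs(corr[j]))
    return best, corr[best]


n_unknowns, n_measurements = 64, 8
cols = unit_columns(n_measurements, n_unknowns)

signal = [0.0] * n_unknowns
signal[37] = 2.5  # the single nonzero entry

y = measure(cols, signal)
index, amplitude = recover_one_sparse(cols, y)
print(index, amplitude)
```

Because every column has unit norm and no two random columns are parallel, the true column always has the largest correlation with the measurements in this single-entry case.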

The archived Compressive Holography page has more information.

Computational Spectral Microscopy

We have extended the use of high-throughput spectrometers to spectral microscopy applications. A pushbroom-scanned high-throughput spectrometer on the back end of a microscope creates a three-dimensional data cube (2D spatial, 1D spectral) from a fluorescent fixed-cellular assay. Current system designs include a snapshot dual disperser (DD-CASSI) architecture. Our goal includes imaging dynamic cellular events using fluorescence microscopy as a tool. The benefits of these computational spectral imagers applied to microscopy include high throughput analysis and a higher spectral measurement when compared to conventional spectral imaging systems.

The archived Computational Spectral Microscopy page has more information.

AWARE Wide Field of View Imaging

The AWARE Wide Field of View Imaging Project uses a multi-scale design technique to develop large format imaging systems that significantly exceed the size, weight, and power (SWAP) constraints of conventional imaging systems. Through the use of monocentric optical designs and using microcameras as a general optical processing unit, we are developing designs that scale from one gigapixel to 40 gigapixels and greater.

Multi-frame Coded Aperture Snapshot Spectral Imaging

Multiframe Coded Aperture Spectral Imagers are based on the CASSI system, but modulate the coded aperture between snapshots to increase the rank of the system matrix. For static scenes, this greatly enhances the reconstruction quality with only slight computational and acquisition overhead.
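
The effect of changing the code between snapshots on the rank of the system matrix can be seen in a toy model. The Python sketch below is an assumption made for illustration, not the actual CASSI forward model: a one-dimensional scene with two spectral bands passes through a 0/1 mask, the disperser shifts the second band by one detector pixel, and the detector sums the bands. The masks and function names are hypothetical.

```python
from fractions import Fraction


def frame_rows(mask, n_bands=2):
    """System-matrix rows for one snapshot of the toy coded, dispersed imager."""
    n_pix = len(mask)
    rows = []
    for d in range(n_pix + n_bands - 1):  # detector pixels
        row = [0] * (n_bands * n_pix)
        for band in range(n_bands):
            p = d - band  # scene pixel that band `band` maps onto detector pixel d
            if 0 <= p < n_pix and mask[p]:
                row[band * n_pix + p] = 1
        rows.append(row)
    return rows


def matrix_rank(rows):
    """Exact rank by Gaussian elimination over the rationals."""
    m = [[Fraction(v) for v in row] for row in rows]
    rank = 0
    for col in range(len(m[0]) if m else 0):
        pivot = next((r for r in range(rank, len(m)) if m[r][col] != 0), None)
        if pivot is None:
            continue
        m[rank], m[pivot] = m[pivot], m[rank]
        for r in range(rank + 1, len(m)):
            if m[r][col] != 0:
                factor = m[r][col] / m[rank][col]
                m[r] = [a - factor * b for a, b in zip(m[r], m[rank])]
        rank += 1
        if rank == len(m):
            break
    return rank


mask_a = [1, 1, 0, 1]  # code used for the first snapshot
mask_b = [0, 1, 1, 1]  # different code used for the second snapshot

rank_single = matrix_rank(frame_rows(mask_a))
rank_stacked = matrix_rank(frame_rows(mask_a) + frame_rows(mask_b))
print(rank_single, rank_stacked)
```

In this toy case there are eight unknowns (four pixels in each of two bands); one snapshot leaves the system rank-deficient, while stacking a second snapshot with a different mask brings it to full rank.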

Millimeter Wave and Terahertz Imaging

Millimeter Wave and Terahertz Imaging is of great interest for its large penetration depth at non-ionizing energy levels. Current projects in millimeter wave consist of compressive holography and interferometric synthetic aperture imaging.

Coded Aperture X-ray Imaging

Coded aperture x-ray imaging enables molecular specificity and measurement efficiency in x-ray tomography systems.

Computational Mass Spectroscopy

As described in US Patent 7,399,957, DISP is building compressive mass spectroscopy systems.

Knowledge Enhanced Exapixel Photography

Under the DARPA Knowledge Enhanced Compressive Measurement (KECoM) program, DISP is developing image space coding strategies to radically reduce electronic power cost in high pixel count cameras. Preliminary results under this program are described in "Coding for Compressive Focal Tomography" by D. J. Brady and D. L. Marks.

The Future of DISP?

When David Brady arrived at UIUC in 1990, the Chicago Tribune featured him in an article that touted the possibility of optical computing, but his subsequent research with the Photonic Systems Group took him in a very different direction. The future of DISP is similarly hard to predict. The research projects currently being conducted have the potential to expand in multiple, as yet undiscovered, directions. What does the future hold for the group? Only time and the talented minds of DISP can tell...